Summary

This article traces how write and read commands are submitted in NVMe over TCP.

1. Environment

This article covers only the nvme kernel module source code of Linux 5.4.0. The following commands issue a write and a read:

    sudo nvme io-passthru /dev/nvme1n1 --opcode=1 --namespace-id=1 --data-len=4096 --write --cdw10=5120 --cdw11=0 --cdw12=7 -s -i 123.txt
    sudo nvme io-passthru /dev/nvme1n1 --opcode=2 --namespace-id=1 --data-len=4096 --read --cdw10=5120 --cdw11=0 --cdw12=7 -s -i 456.txt

The walkthrough of the write path starts at the nvme_ioctl function.
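The command dwords passed on the command line can be decoded by hand. The sketch below shows how CDW10 to CDW12 of an NVMe read/write command map to a starting LBA and a block count. The helper names `decode_rw` and `rw_bytes` are made up for illustration, and the 512-byte logical block size is an assumption about the namespace used here.

```c
#include <stdint.h>

/* Fields of an NVMe Read/Write command carried in CDW10..CDW12. */
struct rw_fields {
    uint64_t slba;    /* starting LBA: CDW11 is the high half, CDW10 the low half */
    uint32_t nblocks; /* block count: CDW12[15:0] holds a zero-based value */
};

/* Hypothetical helper: decode the three dwords given to nvme io-passthru. */
static struct rw_fields decode_rw(uint32_t cdw10, uint32_t cdw11, uint32_t cdw12)
{
    struct rw_fields f;

    f.slba = ((uint64_t)cdw11 << 32) | cdw10;
    f.nblocks = (cdw12 & 0xffffu) + 1;
    return f;
}

/* Hypothetical helper: transfer size in bytes for a given logical block size. */
static uint64_t rw_bytes(struct rw_fields f, uint32_t block_size)
{
    return (uint64_t)f.nblocks * block_size;
}
```

With `--cdw10=5120 --cdw11=0 --cdw12=7`, `decode_rw(5120, 0, 7)` gives a starting LBA of 5120 and 8 blocks, and `rw_bytes(f, 512)` gives 4096 bytes, which matches `--data-len=4096` under the 512-byte assumption.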

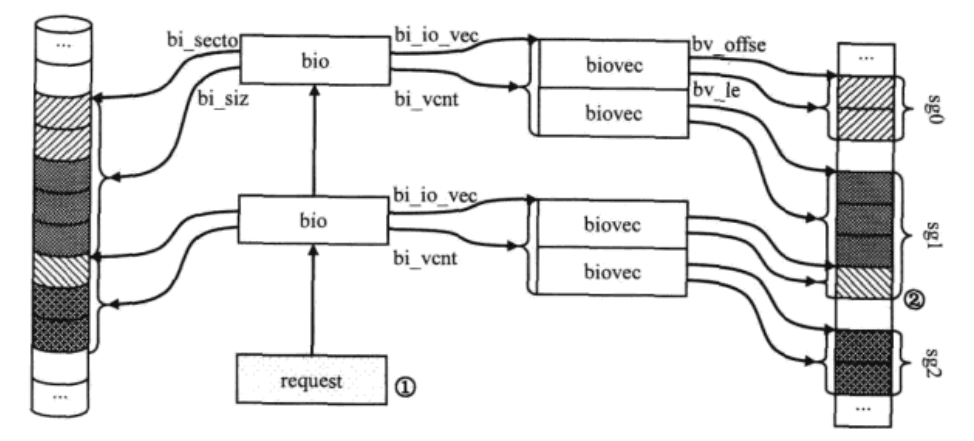

2. Background

NVMe over TCP describes data buffers with SGLs (Scatter Gather Lists), not PRPs (Physical Region Pages).
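To make the SGL point concrete: the traces in the next section show `nvme_tcp_set_sg_inline` for the write and `nvme_tcp_set_sg_host_data` for the read, and the two differ in the SGL descriptor type byte they set. The sketch below reproduces how that byte is composed. The constant values are the ones in `include/linux/nvme.h`; `sgl_type` and `sgl_is_inline` are hypothetical helpers written for this example.

```c
#include <stdint.h>

/* Low nibble: SGL descriptor subtype (values from include/linux/nvme.h). */
enum {
    NVME_SGL_FMT_OFFSET      = 0x01,
    NVME_SGL_FMT_TRANSPORT_A = 0x0A,
};

/* High nibble: SGL descriptor type (values from include/linux/nvme.h). */
enum {
    NVME_SGL_FMT_DATA_DESC       = 0x00,
    NVME_TRANSPORT_SGL_DATA_DESC = 0x05,
};

/* Hypothetical helper: pack descriptor type and subtype into one byte. */
static uint8_t sgl_type(uint8_t desc, uint8_t subtype)
{
    return (uint8_t)((desc << 4) | subtype);
}

/* Hypothetical helper: does this type byte describe in-capsule (inline) data? */
static int sgl_is_inline(uint8_t type)
{
    return type == sgl_type(NVME_SGL_FMT_DATA_DESC, NVME_SGL_FMT_OFFSET);
}
```

An inline write therefore carries type `0x01` (data block descriptor, offset subtype), while a read carries `0x5A` (transport data block descriptor). The target's `nvmet_tcp_map_data` compares against the first value to detect in-capsule data.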

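3. Host-side and target-side I/O call stacks

I added a printk to every function. When the write command is issued, the functions are called on the host and on the target in the following order:

Host side: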

    [  627.604513] nvme_dev_release
    [  709.055115] *****************************nvme_ioctl
    [  709.055116] nvme_get_ns_from_disk
    [  709.055117] nvme_user_cmd
    [  709.055120] nvme_passthru_start
    [  709.055120] nvme_to_user_ptr
    [  709.055121] nvme_to_user_ptr
    [  709.055121] nvme_submit_user_cmd
    [  709.055122] nvme_alloc_request
    [  709.055128] nvme_tcp_queue_rq
    [  709.055128] nvme_tcp_setup_cmd_pdu
    [  709.055129] nvme_setup_cmd
    [  709.055129] nvme_tcp_map_data
    [  709.055129] nvme_tcp_set_sg_inline
    [  709.055131] ##### nvme_tcp_queue_request
    [  709.055135] *************************************nvme_tcp_io_work
    [  709.055136] nvme_tcp_try_send
    [  709.055136] nvme_tcp_fetch_request
    [  709.055136] nvme_tcp_try_send_cmd_pdu
    [  709.055137] nvme_tcp_has_inline_data
    [  709.055146] nvme_tcp_init_iter
    [  709.055147] nvme_tcp_has_inline_data
    [  709.055147] nvme_tcp_try_send_data
    [  709.060325] nvme_tcp_data_ready
    [  709.060327] nvme_tcp_advance_req
    [  709.060328] nvme_tcp_try_recv
    [  709.060328] nvme_tcp_recv_skb
    [  709.060329] nvme_tcp_recv_pdu
    [  709.060329] nvme_tcp_init_recv_ctx
    [  709.060329] nvme_tcp_handle_comp
    [  709.060330] nvme_tcp_process_nvme_cqe
    [  709.060331] nvme_complete_rq
    [  709.060331] nvme_error_status
    [  709.060339] nvme_tcp_try_send
    [  709.060339] nvme_tcp_fetch_request
    [  709.060339] nvme_tcp_try_recv
    [  709.060340] *************************************nvme_tcp_io_work
    [  709.060341] nvme_tcp_try_send
    [  709.060341] nvme_tcp_fetch_request
    [  709.060341] nvme_tcp_try_recv

Target side:

    [  587.920537] nvmet_tcp_data_ready
    [  587.920666] nvmet_tcp_io_work
    [  587.920667] ********************************nvmet_tcp_try_recv
    [  587.920667] nvmet_tcp_try_recv_one
    [  587.920667] nvmet_tcp_try_recv_pdu
    [  587.920672] nvmet_tcp_done_recv_pdu
    [  587.920672] nvmet_tcp_get_cmd
    [  587.920674] nvmet_req_init
    [  587.920674] nvmet_parse_io_cmd
    [  587.920675] nvmet_bdev_parse_io_cmd
    [  587.920677] nvmet_tcp_map_data
    [  587.920681] nvmet_tcp_map_pdu_iovec
    [  587.920681] nvmet_tcp_try_recv_data
    [  587.920683] nvmet_req_execute
    [  587.920684] nvmet_bdev_execute_rw
    [  587.920696] nvme_setup_cmd
    [  587.920697] nvme_setup_rw
    [  587.920711] nvmet_prepare_receive_pdu
    [  587.920711] nvmet_tcp_try_recv_one
    [  587.920711] nvmet_tcp_try_recv_pdu
    [  587.920712] nvmet_tcp_try_send
    [  587.920712] nvmet_tcp_try_send_one
    [  587.920713] nvmet_tcp_fetch_cmd
    [  587.920713] nvmet_tcp_process_resp_list
    [  587.922352] nvme_cleanup_cmd
    [  587.922353] nvme_complete_rq
    [  587.922354] nvme_error_status
    [  587.922356] nvmet_bio_done
    [  587.922357] blk_to_nvme_status
    [  587.922357] nvmet_req_complete
    [  587.922358] __nvmet_req_complete
    [  587.922359] nvmet_tcp_queue_response
    [  587.922370] nvmet_tcp_io_work
    [  587.922370] ********************************nvmet_tcp_try_recv
    [  587.922371] nvmet_tcp_try_recv_one
    [  587.922371] nvmet_tcp_try_recv_pdu
    [  587.922373] nvmet_tcp_try_send
    [  587.922374] nvmet_tcp_try_send_one
    [  587.922374] nvmet_tcp_fetch_cmd
    [  587.922374] nvmet_tcp_process_resp_list
    [  587.922375] nvmet_setup_response_pdu
    [  587.922375] nvmet_try_send_response
    [  587.922430] nvmet_tcp_try_send_one
    [  587.922431] nvmet_tcp_fetch_cmd
    [  587.922431] nvmet_tcp_process_resp_list

When the read command is issued, the I/O call stack is as follows:

Host side:

    [10119.754240] *****************************nvme_ioctl
    [10119.754241] nvme_get_ns_from_disk
    [10119.754246] nvme_user_cmd
    [10119.754248] nvme_passthru_start
    [10119.754249] nvme_to_user_ptr
    [10119.754250] nvme_to_user_ptr
    [10119.754250] nvme_submit_user_cmd
    [10119.754251] nvme_alloc_request
    [10119.754264] nvme_tcp_queue_rq
    [10119.754265] nvme_tcp_setup_cmd_pdu
    [10119.754265] nvme_setup_cmd
    [10119.754268] nvme_tcp_init_iter
    [10119.754269] nvme_tcp_map_data
    [10119.754269] nvme_tcp_set_sg_host_data
    [10119.754271] ##### nvme_tcp_queue_request
    [10119.754278] *************************************nvme_tcp_io_work
    [10119.754278] nvme_tcp_try_send
    [10119.754278] nvme_tcp_fetch_request
    [10119.754279] nvme_tcp_try_send_cmd_pdu
    [10119.754279] nvme_tcp_has_inline_data
    [10119.754363] nvme_tcp_done_send_req
    [10119.754364] nvme_tcp_has_inline_data
    [10119.754364] nvme_tcp_try_recv
    [10119.754365] nvme_tcp_try_send
    [10119.754365] nvme_tcp_fetch_request
    [10119.754366] nvme_tcp_try_recv
    [10119.760851] nvme_tcp_data_ready
    [10119.760860] nvme_tcp_data_ready
    [10119.760864] *************************************nvme_tcp_io_work
    [10119.760865] nvme_tcp_try_send
    [10119.760865] nvme_tcp_fetch_request
    [10119.760866] nvme_tcp_try_recv
    [10119.762412] nvme_tcp_recv_skb
    [10119.762412] nvme_tcp_recv_pdu
    [10119.762413] nvme_tcp_handle_c2h_data
    [10119.762415] nvme_tcp_recv_data
    [10119.762417] nvme_tcp_init_recv_ctx
    [10119.762417] nvme_tcp_recv_pdu
    [10119.762417] nvme_tcp_init_recv_ctx
    [10119.762418] nvme_tcp_handle_comp
    [10119.762418] nvme_tcp_process_nvme_cqe
    [10119.762419] nvme_complete_rq
    [10119.762419] nvme_error_status
    [10119.762426] nvme_tcp_try_send
    [10119.762426] nvme_tcp_fetch_request
    [10119.762427] nvme_tcp_try_recv

Target side:

    [10113.557551] nvmet_tcp_io_work
    [10113.557552] ********************************nvmet_tcp_try_recv
    [10113.557552] nvmet_tcp_try_recv_one
    [10113.557553] nvmet_tcp_try_recv_pdu
    [10113.557562] nvmet_tcp_done_recv_pdu
    [10113.557562] nvmet_tcp_get_cmd
    [10113.557567] nvmet_req_init
    [10113.557567] nvmet_parse_io_cmd
    [10113.557576] nvmet_bdev_parse_io_cmd
    [10113.557579] nvmet_tcp_map_data
    [10113.557583] nvmet_req_execute
    [10113.557583] nvmet_bdev_execute_rw
    [10113.557607] nvme_setup_cmd
    [10113.557607] nvme_setup_rw
    [10113.557635] nvme_cleanup_cmd
    [10113.557636] nvme_complete_rq
    [10113.557637] nvme_error_status
    [10113.557637] blk_mq_end_request
    [10113.557638] nvmet_bio_done
    [10113.557638] blk_to_nvme_status
    [10113.557639] nvmet_req_complete
    [10113.557639] __nvmet_req_complete
    [10113.557640] nvmet_tcp_queue_response
    [10113.557645] nvmet_prepare_receive_pdu
    [10113.557645] nvmet_tcp_try_recv_one
    [10113.557646] nvmet_tcp_try_recv_pdu
    [10113.557646] nvmet_tcp_try_send
    [10113.557647] nvmet_tcp_try_send_one
    [10113.557647] nvmet_tcp_fetch_cmd
    [10113.557647] nvmet_tcp_process_resp_list
    [10113.557648] nvmet_setup_c2h_data_pdu
    [10113.557649] nvmet_try_send_data_pdu
    [10113.557653] nvmet_try_send_data
    [10113.557685] nvmet_setup_response_pdu
    [10113.557685] nvmet_try_send_response
    [10113.557686] nvmet_tcp_put_cmd
    [10113.557687] nvmet_tcp_try_send_one
    [10113.557687] nvmet_tcp_fetch_cmd
    [10113.557687] nvmet_tcp_process_resp_list
    [10113.557687] llist_del_all=NULL
    [10113.557688] nvmet_tcp_io_work
    [10113.557688] ********************************nvmet_tcp_try_recv
    [10113.557689] nvmet_tcp_try_recv_one
    [10113.557689] nvmet_tcp_try_recv_pdu
    [10113.557690] nvmet_tcp_try_send
    [10113.557690] nvmet_tcp_try_send_one
    [10113.557690] nvmet_tcp_fetch_cmd
    [10113.557691] nvmet_tcp_process_resp_list

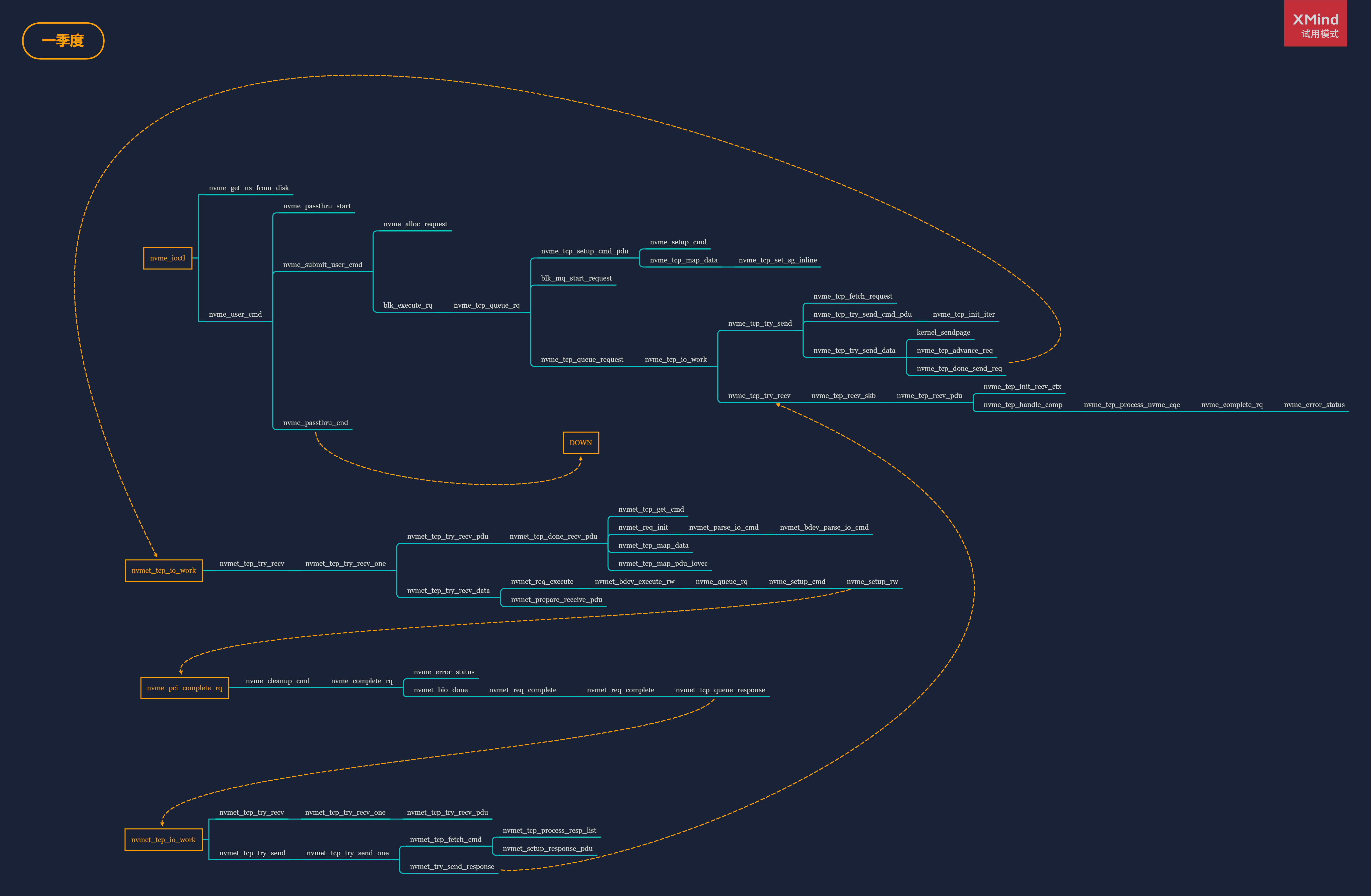

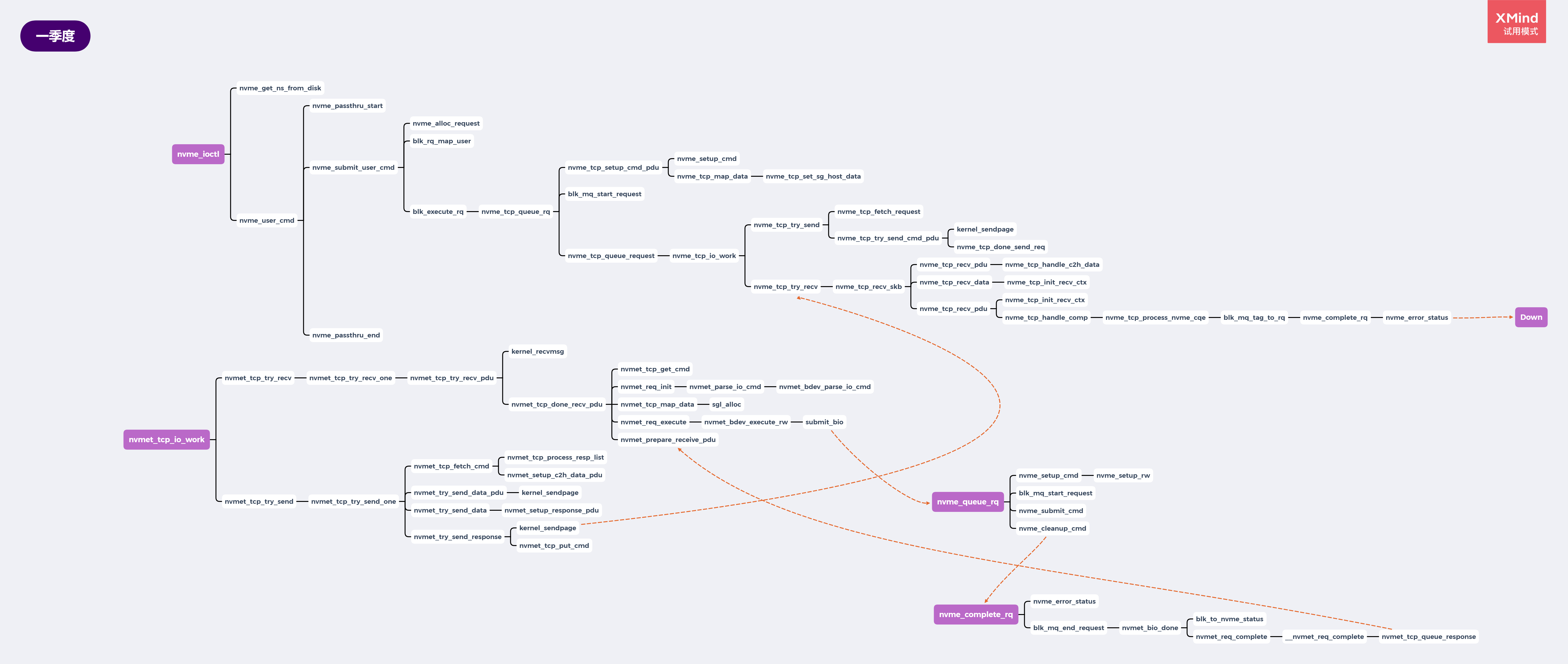

4. Write命令函数调用关系图 5. Read命令函数调用关系图 Write和Read命令下发的IO栈函数关系图如图所示,自己画的不是特别美观,看不清楚的可以下载下来然后放大

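The sections below take the Write command as the example and analyze the functions in the order the arrows follow in the graph, top to bottom and left to right. The Read command is handled similarly.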

6. Detailed function analysis

Host side

nvme_ioctl

Execution enters nvme_ioctl, which receives the write command issued by nvme-cli.

    static int nvme_ioctl(struct block_device *bdev, fmode_t mode,
            unsigned int cmd, unsigned long arg)
    {
        struct nvme_ns_head *head = NULL;
        void __user *argp = (void __user *)arg;
        struct nvme_ns *ns;
        int srcu_idx, ret;

        ns = nvme_get_ns_from_disk(bdev->bd_disk, &head, &srcu_idx);
        if (unlikely(!ns))
            return -EWOULDBLOCK;

        /* Controller-level ioctls are dispatched separately. */
        if (is_ctrl_ioctl(cmd)) {
            return nvme_handle_ctrl_ioctl(ns, cmd, argp, head, srcu_idx);
        }

        switch (cmd) {
        case NVME_IOCTL_ID:
            force_successful_syscall_return();
            ret = ns->head->ns_id;
            break;
        case NVME_IOCTL_IO_CMD:
            /* Passthrough I/O command: the path taken in this walkthrough. */
            ret = nvme_user_cmd(ns->ctrl, ns, argp);
            break;
        case NVME_IOCTL_SUBMIT_IO:
            ret = nvme_submit_io(ns, argp);
            break;
        case NVME_IOCTL_IO64_CMD:
            ret = nvme_user_cmd64(ns->ctrl, ns, argp);
            break;
        default:
            if (ns->ndev)
                ret = nvme_nvm_ioctl(ns, cmd, arg);
            else
                ret = -ENOTTY;
        }

        nvme_put_ns_from_disk(head, srcu_idx);
        return ret;
    }

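The nvme-cli io-passthru subcommand issues the NVME_IOCTL_IO_CMD ioctl, so the write command is dispatched to nvme_user_cmd for submission.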

nvme_user_cmd

    static int nvme_user_cmd(struct nvme_ctrl *ctrl, struct nvme_ns *ns,
            struct nvme_passthru_cmd __user *ucmd)
    {
        struct nvme_passthru_cmd cmd;
        struct nvme_command c;
        unsigned timeout = 0;
        u32 effects;
        u64 result;
        int status;

        if (!capable(CAP_SYS_ADMIN))
            return -EACCES;
        if (copy_from_user(&cmd, ucmd, sizeof(cmd)))
            return -EFAULT;
        if (cmd.flags)
            return -EINVAL;

        /* Copy the user-supplied passthrough fields into the NVMe command. */
        memset(&c, 0, sizeof(c));
        c.common.opcode = cmd.opcode;
        c.common.flags = cmd.flags;
        c.common.nsid = cpu_to_le32(cmd.nsid);
        c.common.cdw2[0] = cpu_to_le32(cmd.cdw2);
        c.common.cdw2[1] = cpu_to_le32(cmd.cdw3);
        c.common.cdw10 = cpu_to_le32(cmd.cdw10);
        c.common.cdw11 = cpu_to_le32(cmd.cdw11);
        c.common.cdw12 = cpu_to_le32(cmd.cdw12);
        c.common.cdw13 = cpu_to_le32(cmd.cdw13);
        c.common.cdw14 = cpu_to_le32(cmd.cdw14);
        c.common.cdw15 = cpu_to_le32(cmd.cdw15);

        if (cmd.timeout_ms)
            timeout = msecs_to_jiffies(cmd.timeout_ms);

        effects = nvme_passthru_start(ctrl, ns, cmd.opcode);
        /* I/O commands go to the namespace queue, admin commands to admin_q. */
        status = nvme_submit_user_cmd(ns ? ns->queue : ctrl->admin_q, &c,
                nvme_to_user_ptr(cmd.addr), cmd.data_len,
                nvme_to_user_ptr(cmd.metadata), cmd.metadata_len,
                0, &result, timeout);
        nvme_passthru_end(ctrl, effects);

        if (status >= 0) {
            if (put_user(result, &ucmd->result)) {
                return -EFAULT;
            }
        }

        return status;
    }

nvme_submit_user_cmd

    static int nvme_submit_user_cmd(struct request_queue *q,
            struct nvme_command *cmd, void __user *ubuffer,
            unsigned bufflen, void __user *meta_buffer, unsigned meta_len,
            u32 meta_seed, u64 *result, unsigned timeout)
    {
        bool write = nvme_is_write(cmd);
        struct nvme_ns *ns = q->queuedata;
        struct gendisk *disk = ns ? ns->disk : NULL;
        struct request *req;
        struct bio *bio = NULL;
        void *meta = NULL;
        int ret;

        req = nvme_alloc_request(q, cmd, 0, NVME_QID_ANY);
        if (IS_ERR(req)) {
            return PTR_ERR(req);
        }

        req->timeout = timeout ? timeout : ADMIN_TIMEOUT;
        nvme_req(req)->flags |= NVME_REQ_USERCMD;

        if (ubuffer && bufflen) {
            /* Map the user buffer into the request as a bio. */
            ret = blk_rq_map_user(q, req, NULL, ubuffer, bufflen,
                    GFP_KERNEL);
            if (ret)
                goto out;
            bio = req->bio;
            bio->bi_disk = disk;
            if (disk && meta_buffer && meta_len) {
                meta = nvme_add_user_metadata(bio, meta_buffer, meta_len,
                        meta_seed, write);
                if (IS_ERR(meta)) {
                    ret = PTR_ERR(meta);
                    goto out_unmap;
                }
                req->cmd_flags |= REQ_INTEGRITY;
            }
        }

        /* Submit and wait; for NVMe/TCP this reaches nvme_tcp_queue_rq. */
        blk_execute_rq(req->q, disk, req, 0);
        if (nvme_req(req)->flags & NVME_REQ_CANCELLED)
            ret = -EINTR;
        else
            ret = nvme_req(req)->status;
        if (result)
            *result = le64_to_cpu(nvme_req(req)->result.u64);
        if (meta && !ret && !write) {
            if (copy_to_user(meta_buffer, meta, meta_len))
                ret = -EFAULT;
        }
        kfree(meta);
    out_unmap:
        if (bio)
            blk_rq_unmap_user(bio);
    out:
        blk_mq_free_request(req);
        return ret;
    }

nvme_tcp_queue_rq

    static blk_status_t nvme_tcp_queue_rq(struct blk_mq_hw_ctx *hctx,
            const struct blk_mq_queue_data *bd)
    {
        struct nvme_ns *ns = hctx->queue->queuedata;
        struct nvme_tcp_queue *queue = hctx->driver_data;
        struct request *rq = bd->rq;
        struct nvme_tcp_request *req = blk_mq_rq_to_pdu(rq);
        bool queue_ready = test_bit(NVME_TCP_Q_LIVE, &queue->flags);
        blk_status_t ret;

        if (!nvmf_check_ready(&queue->ctrl->ctrl, rq, queue_ready))
            return nvmf_fail_nonready_command(&queue->ctrl->ctrl, rq);

        /* Build the command PDU, then hand the request to the send path. */
        ret = nvme_tcp_setup_cmd_pdu(ns, rq);
        if (unlikely(ret))
            return ret;

        blk_mq_start_request(rq);

        nvme_tcp_queue_request(req);

        return BLK_STS_OK;
    }

nvme_tcp_setup_cmd_pdu

    static blk_status_t nvme_tcp_setup_cmd_pdu(struct nvme_ns *ns,
            struct request *rq)
    {
        struct nvme_tcp_request *req = blk_mq_rq_to_pdu(rq);
        struct nvme_tcp_cmd_pdu *pdu = req->pdu;
        struct nvme_tcp_queue *queue = req->queue;
        u8 hdgst = nvme_tcp_hdgst_len(queue), ddgst = 0;
        blk_status_t ret;

        ret = nvme_setup_cmd(ns, rq, &pdu->cmd);
        if (ret)
            return ret;

        req->state = NVME_TCP_SEND_CMD_PDU;
        req->offset = 0;
        req->data_sent = 0;
        req->pdu_len = 0;
        req->pdu_sent = 0;
        req->data_len = blk_rq_nr_phys_segments(rq) ?
                    blk_rq_payload_bytes(rq) : 0;
        req->curr_bio = rq->bio;

        /* Small writes travel in the command capsule as inline data. */
        if (rq_data_dir(rq) == WRITE &&
            req->data_len <= nvme_tcp_inline_data_size(queue))
            req->pdu_len = req->data_len;
        else if (req->curr_bio)
            nvme_tcp_init_iter(req, READ);

        pdu->hdr.type = nvme_tcp_cmd;
        pdu->hdr.flags = 0;
        if (queue->hdr_digest)
            pdu->hdr.flags |= NVME_TCP_F_HDGST;
        if (queue->data_digest && req->pdu_len) {
            pdu->hdr.flags |= NVME_TCP_F_DDGST;
            ddgst = nvme_tcp_ddgst_len(queue);
        }
        pdu->hdr.hlen = sizeof(*pdu);
        pdu->hdr.pdo = req->pdu_len ? pdu->hdr.hlen + hdgst : 0;
        pdu->hdr.plen =
            cpu_to_le32(pdu->hdr.hlen + hdgst + req->pdu_len + ddgst);

        ret = nvme_tcp_map_data(queue, rq);
        if (unlikely(ret)) {
            nvme_cleanup_cmd(rq);
            dev_err(queue->ctrl->ctrl.device,
                "Failed to map data (%d)\n", ret);
            return ret;
        }

        return 0;
    }
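The header arithmetic in nvme_tcp_setup_cmd_pdu is easy to check in isolation. The following is a userspace sketch, not driver code: `cmd_pdu_len` and `struct pdu_len` are hypothetical names, the 72-byte header length assumes the 8-byte common PDU header plus a 64-byte NVMe command, and the 4-byte digest length assumes CRC32C digests.

```c
#include <stdint.h>

#define CMD_PDU_HLEN 72u /* assumed: 8-byte common header + 64-byte NVMe command */
#define DIGEST_LEN    4u /* assumed: CRC32C header/data digest */

/* The three length fields that the function fills in the PDU header. */
struct pdu_len {
    uint8_t  hlen; /* header length */
    uint8_t  pdo;  /* PDU data offset, 0 when no inline data follows */
    uint32_t plen; /* total PDU length */
};

/* Hypothetical helper mirroring the hlen/pdo/plen computation. */
static struct pdu_len cmd_pdu_len(uint32_t inline_len, int hdr_digest,
                                  int data_digest)
{
    uint32_t hdgst = hdr_digest ? DIGEST_LEN : 0;
    /* A data digest is only appended when inline data is present. */
    uint32_t ddgst = (data_digest && inline_len) ? DIGEST_LEN : 0;
    struct pdu_len l;

    l.hlen = CMD_PDU_HLEN;
    l.pdo = inline_len ? (uint8_t)(CMD_PDU_HLEN + hdgst) : 0;
    l.plen = CMD_PDU_HLEN + hdgst + inline_len + ddgst;
    return l;
}
```

For the 4096-byte inline write traced in this article, with no digests, this gives `pdo = 72` and `plen = 4168`. For the read, no data travels in the capsule, so `pdo = 0` and `plen = 72`.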

nvme_tcp_queue_request

    static inline void nvme_tcp_queue_request(struct nvme_tcp_request *req)
    {
        struct nvme_tcp_queue *queue = req->queue;

        printk("##### nvme_tcp_queue_request"); /* debug trace added for this article */

        /* Append to the send list, then kick the queue's I/O worker. */
        spin_lock(&queue->lock);
        list_add_tail(&req->entry, &queue->send_list);
        spin_unlock(&queue->lock);

        queue_work_on(queue->io_cpu, nvme_tcp_wq, &queue->io_work);
    }

nvme_tcp_io_work

    static void nvme_tcp_io_work(struct work_struct *w)
    {
        struct nvme_tcp_queue *queue =
            container_of(w, struct nvme_tcp_queue, io_work);
        unsigned long deadline = jiffies + msecs_to_jiffies(1);

        do {
            bool pending = false;
            int result;

            result = nvme_tcp_try_send(queue);
            if (result > 0) {
                pending = true;
            } else if (unlikely(result < 0)) {
                dev_err(queue->ctrl->ctrl.device,
                    "failed to send request %d\n", result);
                if ((result != -EPIPE) && (result != -ECONNRESET)) {
                    nvme_tcp_fail_request(queue->request);
                }
                nvme_tcp_done_send_req(queue);
                return;
            }

            result = nvme_tcp_try_recv(queue);
            if (result > 0)
                pending = true;

            /* Nothing was sent or received: stop until the next kick. */
            if (!pending) {
                return;
            }

        } while (!time_after(jiffies, deadline));

        /* The 1 ms budget ran out with work pending: requeue ourselves. */
        queue_work_on(queue->io_cpu, nvme_tcp_wq, &queue->io_work);
    }

nvme_tcp_try_send

    static int nvme_tcp_try_send(struct nvme_tcp_queue *queue)
    {
        struct nvme_tcp_request *req;
        int ret = 1;

        if (!queue->request) {
            queue->request = nvme_tcp_fetch_request(queue);
            if (!queue->request) {
                return 0;
            }
        }
        req = queue->request;

        /* Each stage falls through to the next once it has completed. */
        if (req->state == NVME_TCP_SEND_CMD_PDU) {
            ret = nvme_tcp_try_send_cmd_pdu(req);
            if (ret <= 0)
                goto done;
            if (!nvme_tcp_has_inline_data(req)) {
                return ret;
            }
        }

        if (req->state == NVME_TCP_SEND_H2C_PDU) {
            ret = nvme_tcp_try_send_data_pdu(req);
            if (ret <= 0)
                goto done;
        }

        if (req->state == NVME_TCP_SEND_DATA) {
            ret = nvme_tcp_try_send_data(req);
            if (ret <= 0)
                goto done;
        }

        if (req->state == NVME_TCP_SEND_DDGST)
            ret = nvme_tcp_try_send_ddgst(req);
    done:
        if (ret == -EAGAIN)
            ret = 0;
        return ret;
    }
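The send path is a small state machine keyed on `req->state`. The sketch below is a simplified userspace model of the transitions visible in nvme_tcp_try_send and the helpers shown in this article. The enum and `next_send_state` are hypothetical, and `SEND_DONE` stands in for the call to nvme_tcp_done_send_req; the driver has no such state.

```c
/* Simplified model of the host send states (names shortened for the example). */
enum send_state {
    SEND_CMD_PDU, /* NVME_TCP_SEND_CMD_PDU */
    SEND_H2C_PDU, /* NVME_TCP_SEND_H2C_PDU */
    SEND_DATA,    /* NVME_TCP_SEND_DATA */
    SEND_DDGST,   /* NVME_TCP_SEND_DDGST */
    SEND_DONE     /* hypothetical: models nvme_tcp_done_send_req() */
};

/*
 * Hypothetical helper: state reached once the current stage has been fully
 * written to the socket.
 */
static enum send_state next_send_state(enum send_state s, int inline_data,
                                       int data_digest)
{
    switch (s) {
    case SEND_CMD_PDU:
        /* Inline writes continue with the payload; everything else is done. */
        return inline_data ? SEND_DATA : SEND_DONE;
    case SEND_H2C_PDU:
        return SEND_DATA;
    case SEND_DATA:
        /* After the last byte, a data digest follows only if negotiated. */
        return data_digest ? SEND_DDGST : SEND_DONE;
    default:
        return SEND_DONE;
    }
}
```

For the traced write (inline data, no digest) the model goes CMD_PDU, DATA, done, which matches the order nvme_tcp_try_send_cmd_pdu then nvme_tcp_try_send_data in the host log. For the read it goes straight from CMD_PDU to done, matching nvme_tcp_done_send_req in the read log.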

nvme_tcp_try_send_cmd_pdu

    static int nvme_tcp_try_send_cmd_pdu(struct nvme_tcp_request *req)
    {
        struct nvme_tcp_queue *queue = req->queue;
        struct nvme_tcp_cmd_pdu *pdu = req->pdu;
        bool inline_data = nvme_tcp_has_inline_data(req);
        /* MSG_MORE tells TCP that the inline payload follows immediately. */
        int flags = MSG_DONTWAIT | (inline_data ? MSG_MORE : MSG_EOR);
        u8 hdgst = nvme_tcp_hdgst_len(queue);
        int len = sizeof(*pdu) + hdgst - req->offset;
        int ret;

        if (queue->hdr_digest && !req->offset)
            nvme_tcp_hdgst(queue->snd_hash, pdu, sizeof(*pdu));

        ret = kernel_sendpage(queue->sock, virt_to_page(pdu),
                offset_in_page(pdu) + req->offset, len, flags);
        if (unlikely(ret <= 0))
            return ret;

        len -= ret;
        if (!len) {
            /* Whole PDU header sent: move on to the data or finish. */
            if (inline_data) {
                req->state = NVME_TCP_SEND_DATA;
                if (queue->data_digest)
                    crypto_ahash_init(queue->snd_hash);
                nvme_tcp_init_iter(req, WRITE);
            } else {
                nvme_tcp_done_send_req(queue);
            }
            return 1;
        }
        /* Partial send: remember the offset and retry later. */
        req->offset += ret;

        return -EAGAIN;
    }

nvme_tcp_try_send_data

    static int nvme_tcp_try_send_data(struct nvme_tcp_request *req)
    {
        struct nvme_tcp_queue *queue = req->queue;

        while (true) {
            struct page *page = nvme_tcp_req_cur_page(req);
            size_t offset = nvme_tcp_req_cur_offset(req);
            size_t len = nvme_tcp_req_cur_length(req);
            bool last = nvme_tcp_pdu_last_send(req, len);
            int ret, flags = MSG_DONTWAIT;

            if (last && !queue->data_digest)
                flags |= MSG_EOR;
            else
                flags |= MSG_MORE;

            /* Pages that cannot be passed by reference are copied instead. */
            if (sendpage_ok(page)) {
                ret = kernel_sendpage(queue->sock, page, offset, len,
                        flags);
            } else {
                ret = sock_no_sendpage(queue->sock, page, offset, len,
                        flags);
            }
            if (ret <= 0)
                return ret;

            nvme_tcp_advance_req(req, ret);
            if (queue->data_digest)
                nvme_tcp_ddgst_update(queue->snd_hash, page,
                        offset, ret);

            /* fully successful last write */
            if (last && ret == len) {
                if (queue->data_digest) {
                    nvme_tcp_ddgst_final(queue->snd_hash,
                        &req->ddgst);
                    req->state = NVME_TCP_SEND_DDGST;
                    req->offset = 0;
                } else {
                    nvme_tcp_done_send_req(queue);
                }
                return 1;
            }
        }
        return -EAGAIN;
    }

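At this point the host has finished sending the data (no digest is enabled). Following the timeline, execution continues with the target-side functions.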

Target side

nvmet_tcp_io_work

    static void nvmet_tcp_io_work(struct work_struct *w)
    {
        struct nvmet_tcp_queue *queue =
            container_of(w, struct nvmet_tcp_queue, io_work);
        bool pending;
        int ret, ops = 0;

        do {
            pending = false;

            ret = nvmet_tcp_try_recv(queue, NVMET_TCP_RECV_BUDGET, &ops);
            if (ret > 0) {
                pending = true;
            } else if (ret < 0) {
                if (ret == -EPIPE || ret == -ECONNRESET)
                    kernel_sock_shutdown(queue->sock, SHUT_RDWR);
                else
                    nvmet_tcp_fatal_error(queue);
                return;
            }

            ret = nvmet_tcp_try_send(queue, NVMET_TCP_SEND_BUDGET, &ops);
            if (ret > 0) {
                /* transmitted message/data */
                pending = true;
            } else if (ret < 0) {
                if (ret == -EPIPE || ret == -ECONNRESET)
                    kernel_sock_shutdown(queue->sock, SHUT_RDWR);
                else
                    nvmet_tcp_fatal_error(queue);
                return;
            }

        } while (pending && ops < NVMET_TCP_IO_WORK_BUDGET);

        /* Budget exhausted with work still pending: requeue ourselves. */
        if (pending)
            queue_work_on(queue->cpu, nvmet_tcp_wq, &queue->io_work);
    }

nvmet_tcp_try_recv

    static int nvmet_tcp_try_recv(struct nvmet_tcp_queue *queue,
            int budget, int *recvs)
    {
        int i, ret = 0;

        /* Receive at most `budget` PDUs or data chunks per call. */
        for (i = 0; i < budget; i++) {
            ret = nvmet_tcp_try_recv_one(queue);
            if (ret <= 0)
                break;
            (*recvs)++;
        }

        return ret;
    }

nvmet_tcp_try_recv_one

    static int nvmet_tcp_try_recv_one(struct nvmet_tcp_queue *queue)
    {
        int result = 0;

        if (unlikely(queue->rcv_state == NVMET_TCP_RECV_ERR))
            return 0;

        /* Receive stages: PDU header, then data, then data digest. */
        if (queue->rcv_state == NVMET_TCP_RECV_PDU) {
            result = nvmet_tcp_try_recv_pdu(queue);
            if (result != 0)
                goto done_recv;
        }

        if (queue->rcv_state == NVMET_TCP_RECV_DATA) {
            result = nvmet_tcp_try_recv_data(queue);
            if (result != 0)
                goto done_recv;
        }

        if (queue->rcv_state == NVMET_TCP_RECV_DDGST) {
            result = nvmet_tcp_try_recv_ddgst(queue);
            if (result != 0)
                goto done_recv;
        }

    done_recv:
        if (result < 0) {
            if (result == -EAGAIN)
                return 0;
            return result;
        }
        return 1;
    }

nvmet_tcp_try_recv_pdu

    static int nvmet_tcp_try_recv_pdu(struct nvmet_tcp_queue *queue)
    {
        struct nvme_tcp_hdr *hdr = &queue->pdu.cmd.hdr;
        int len;
        struct kvec iov;
        struct msghdr msg = { .msg_flags = MSG_DONTWAIT };

    recv:
        iov.iov_base = (void *)&queue->pdu + queue->offset;
        iov.iov_len = queue->left; /* author's note on the initial value of left is truncated in the source */
        len = kernel_recvmsg(queue->sock, &msg, &iov, 1,
                iov.iov_len, msg.msg_flags);
        if (unlikely(len < 0))
            return len;

        queue->offset += len;
        queue->left -= len;
        if (queue->left)
            return -EAGAIN;

        /* Common header received: validate it, then read the rest. */
        if (queue->offset == sizeof(struct nvme_tcp_hdr)) {
            u8 hdgst = nvmet_tcp_hdgst_len(queue);

            if (unlikely(!nvmet_tcp_pdu_valid(hdr->type))) {
                pr_err("unexpected pdu type %d\n", hdr->type);
                nvmet_tcp_fatal_error(queue);
                return -EIO;
            }

            if (unlikely(hdr->hlen != nvmet_tcp_pdu_size(hdr->type))) {
                pr_err("pdu %d bad hlen %d\n", hdr->type, hdr->hlen);
                return -EIO;
            }

            queue->left = hdr->hlen - queue->offset + hdgst;
            goto recv;
        }

        if (queue->hdr_digest &&
            nvmet_tcp_verify_hdgst(queue, &queue->pdu, queue->offset)) {
            nvmet_tcp_fatal_error(queue); /* fatal */
            return -EPROTO;
        }

        if (queue->data_digest &&
            nvmet_tcp_check_ddgst(queue, &queue->pdu)) {
            nvmet_tcp_fatal_error(queue); /* fatal */
            return -EPROTO;
        }

        return nvmet_tcp_done_recv_pdu(queue);
    }
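nvmet_tcp_try_recv_pdu reads the PDU header in two steps: first the common header, then whatever the `hlen` field says is still missing, plus the header digest if one is enabled. A minimal sketch of that second-step length, assuming an 8-byte common header and a 4-byte digest (`pdu_hdr_remaining` is a hypothetical helper, not a kernel function):

```c
#include <stdint.h>

#define COMMON_HDR_LEN 8u /* assumed sizeof(struct nvme_tcp_hdr) */
#define HDGST_LEN      4u /* assumed CRC32C header digest length */

/*
 * Hypothetical helper: bytes still to read after the common header, i.e.
 * the value assigned to queue->left before the "goto recv".
 */
static uint32_t pdu_hdr_remaining(uint8_t hlen, int hdr_digest)
{
    return hlen - COMMON_HDR_LEN + (hdr_digest ? HDGST_LEN : 0u);
}
```

For a command PDU with `hlen = 72` and no header digest, 64 bytes remain, which is exactly the NVMe command that follows the common header.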

nvmet_tcp_done_recv_pdu

    static int nvmet_tcp_done_recv_pdu(struct nvmet_tcp_queue *queue)
    {
        struct nvme_tcp_hdr *hdr = &queue->pdu.cmd.hdr;
        struct nvme_command *nvme_cmd = &queue->pdu.cmd.cmd;
        struct nvmet_req *req;
        int ret;

        if (unlikely(queue->state == NVMET_TCP_Q_CONNECTING)) {
            if (hdr->type != nvme_tcp_icreq) {
                pr_err("unexpected pdu type (%d) before icreq\n",
                    hdr->type);
                nvmet_tcp_fatal_error(queue);
                return -EPROTO;
            }
            return nvmet_tcp_handle_icreq(queue);
        }

        if (hdr->type == nvme_tcp_h2c_data) {
            ret = nvmet_tcp_handle_h2c_data_pdu(queue);
            if (unlikely(ret))
                return ret;
            return 0;
        }

        queue->cmd = nvmet_tcp_get_cmd(queue);
        if (unlikely(!queue->cmd)) {
            /* This should never happen */
            pr_err("queue %d: out of commands (%d) send_list_len: %d, opcode: %d",
                queue->idx, queue->nr_cmds, queue->send_list_len,
                nvme_cmd->common.opcode);
            nvmet_tcp_fatal_error(queue);
            return -ENOMEM;
        }

        req = &queue->cmd->req;
        memcpy(req->cmd, nvme_cmd, sizeof(*nvme_cmd));

        if (unlikely(!nvmet_req_init(req, &queue->nvme_cq,
                &queue->nvme_sq, &nvmet_tcp_ops))) {
            pr_err("failed cmd %p id %d opcode %d, data_len: %d\n",
                req->cmd, req->cmd->common.command_id,
                req->cmd->common.opcode,
                le32_to_cpu(req->cmd->common.dptr.sgl.length));

            nvmet_tcp_handle_req_failure(queue, queue->cmd, req);
            return -EAGAIN;
        }

        ret = nvmet_tcp_map_data(queue->cmd);
        if (unlikely(ret)) {
            pr_err("queue %d: failed to map data\n", queue->idx);
            if (nvmet_tcp_has_inline_data(queue->cmd))
                nvmet_tcp_fatal_error(queue);
            else
                nvmet_req_complete(req, ret);
            ret = -EAGAIN;
            goto out;
        }

        if (nvmet_tcp_need_data_in(queue->cmd)) {
            if (nvmet_tcp_has_inline_data(queue->cmd)) {
                /* Inline write: receive the payload that follows the PDU. */
                queue->rcv_state = NVMET_TCP_RECV_DATA;
                nvmet_tcp_map_pdu_iovec(queue->cmd);
                return 0;
            }
            /* send back R2T */
            nvmet_tcp_queue_response(&queue->cmd->req);
            goto out;
        }

        nvmet_req_execute(&queue->cmd->req);
    out:
        nvmet_prepare_receive_pdu(queue);
        return ret;
    }

nvmet_req_init

    bool nvmet_req_init(struct nvmet_req *req, struct nvmet_cq *cq,
            struct nvmet_sq *sq, const struct nvmet_fabrics_ops *ops)
    {
        u8 flags = req->cmd->common.flags;
        u16 status;

        req->cq = cq;
        req->sq = sq;
        req->ops = ops;
        req->sg = NULL;
        req->sg_cnt = 0;
        req->transfer_len = 0;
        req->cqe->status = 0;
        req->cqe->sq_head = 0;
        req->ns = NULL;
        req->error_loc = NVMET_NO_ERROR_LOC;
        req->error_slba = 0;

        /* no support for fused commands yet */
        /* The nvme_admin_ndp_execute check is as it appears in the author's tree. */
        if (unlikely(flags & (NVME_CMD_FUSE_FIRST | NVME_CMD_FUSE_SECOND)) ||
            req->cmd->common.opcode == nvme_admin_ndp_execute) {
            req->error_loc = offsetof(struct nvme_common_command, flags);
            status = NVME_SC_INVALID_FIELD | NVME_SC_DNR;
            goto fail;
        }

        if (unlikely((flags & NVME_CMD_SGL_ALL) != NVME_CMD_SGL_METABUF)) {
            req->error_loc = offsetof(struct nvme_common_command, flags);
            status = NVME_SC_INVALID_FIELD | NVME_SC_DNR;
            printk("For fabrics, PSDT field shall describe metadata pointer (MPTR) status=%d", status);
            goto fail;
        }

        /* Pick the parser from the queue state and the command type. */
        if (unlikely(!req->sq->ctrl)) {
            /* will return an error for any non-connect command */
            status = nvmet_parse_connect_cmd(req);
        } else if (likely(req->sq->qid != 0)) {
            status = nvmet_parse_io_cmd(req);
        } else if (nvme_is_fabrics(req->cmd)) {
            status = nvmet_parse_fabrics_cmd(req);
        } else if (req->sq->ctrl->subsys->type == NVME_NQN_DISC) {
            status = nvmet_parse_discovery_cmd(req);
        } else {
            status = nvmet_parse_admin_cmd(req);
        }

        if (status)
            goto fail;

        trace_nvmet_req_init(req, req->cmd);

        if (unlikely(!percpu_ref_tryget_live(&sq->ref))) {
            status = NVME_SC_INVALID_FIELD | NVME_SC_DNR;
            printk("unlikely(!percpu_ref_tryget_live(&sq->ref)) status=%d", status);
            goto fail;
        }

        if (sq->ctrl)
            sq->ctrl->cmd_seen = true;

        return true;

    fail:
        __nvmet_req_complete(req, status);
        return false;
    }
    EXPORT_SYMBOL_GPL(nvmet_req_init);

nvmet_parse_io_cmd

    static u16 nvmet_parse_io_cmd(struct nvmet_req *req)
    {
        struct nvme_command *cmd = req->cmd;
        u16 ret;

        ret = nvmet_check_ctrl_status(req, cmd);
        if (unlikely(ret))
            return ret;

        req->ns = nvmet_find_namespace(req->sq->ctrl, cmd->rw.nsid);
        if (unlikely(!req->ns)) {
            req->error_loc = offsetof(struct nvme_common_command, nsid);
            return NVME_SC_INVALID_NS | NVME_SC_DNR;
        }
        ret = nvmet_check_ana_state(req->port, req->ns);
        if (unlikely(ret)) {
            req->error_loc = offsetof(struct nvme_common_command, nsid);
            return ret;
        }
        ret = nvmet_io_cmd_check_access(req);
        if (unlikely(ret)) {
            req->error_loc = offsetof(struct nvme_common_command, nsid);
            return ret;
        }

        /* The namespace is backed either by a file or by a block device. */
        if (req->ns->file) {
            return nvmet_file_parse_io_cmd(req);
        } else {
            return nvmet_bdev_parse_io_cmd(req);
        }
    }

nvmet_bdev_parse_io_cmd

    u16 nvmet_bdev_parse_io_cmd(struct nvmet_req *req)
    {
        struct nvme_command *cmd = req->cmd;

        /* Select the execute handler and the expected data length. */
        switch (cmd->common.opcode) {
        case nvme_cmd_read:
        case nvme_cmd_write:
            req->execute = nvmet_bdev_execute_rw;
            req->data_len = nvmet_rw_len(req);
            return 0;
        case nvme_cmd_flush:
            req->execute = nvmet_bdev_execute_flush;
            req->data_len = 0;
            return 0;
        case nvme_cmd_dsm:
            req->execute = nvmet_bdev_execute_dsm;
            req->data_len = (le32_to_cpu(cmd->dsm.nr) + 1) *
                sizeof(struct nvme_dsm_range);
            return 0;
        case nvme_cmd_write_zeroes:
            req->execute = nvmet_bdev_execute_write_zeroes;
            req->data_len = 0;
            return 0;
        default:
            pr_err("unhandled cmd %d on qid %d\n", cmd->common.opcode,
                   req->sq->qid);
            req->error_loc = offsetof(struct nvme_common_command, opcode);
            return NVME_SC_INVALID_OPCODE | NVME_SC_DNR;
        }
    }

nvmet_tcp_map_data

    static int nvmet_tcp_map_data(struct nvmet_tcp_cmd *cmd)
    {
        struct nvme_sgl_desc *sgl = &cmd->req.cmd->common.dptr.sgl;
        u32 len = le32_to_cpu(sgl->length);

        printk("nvmet_tcp_map_data"); /* debug trace added for this article */

        if (!cmd->req.data_len)
            return 0;

        /* In-capsule (inline) data is only valid for write commands. */
        if (sgl->type == ((NVME_SGL_FMT_DATA_DESC << 4) |
                  NVME_SGL_FMT_OFFSET)) {
            if (!nvme_is_write(cmd->req.cmd))
                return NVME_SC_INVALID_FIELD | NVME_SC_DNR;

            if (len > cmd->req.port->inline_data_size)
                return NVME_SC_SGL_INVALID_OFFSET | NVME_SC_DNR;
            cmd->pdu_len = len;
        }
        cmd->req.transfer_len += len;

        cmd->req.sg = sgl_alloc(len, GFP_KERNEL, &cmd->req.sg_cnt);
        if (!cmd->req.sg)
            return NVME_SC_INTERNAL;
        cmd->cur_sg = cmd->req.sg;

        if (nvmet_tcp_has_data_in(cmd)) {
            cmd->iov = kmalloc_array(cmd->req.sg_cnt,
                    sizeof(*cmd->iov), GFP_KERNEL);
            if (!cmd->iov)
                goto err;
        }

        return 0;
    err:
        sgl_free(cmd->req.sg);
        return NVME_SC_INTERNAL;
    }

nvmet_tcp_try_recv_data

    static int nvmet_tcp_try_recv_data(struct nvmet_tcp_queue *queue)
    {
        struct nvmet_tcp_cmd *cmd = queue->cmd;
        int ret;

        /* Read from the socket until the mapped iovec is full. */
        while (msg_data_left(&cmd->recv_msg)) {
            ret = sock_recvmsg(cmd->queue->sock, &cmd->recv_msg,
                cmd->recv_msg.msg_flags);
            if (ret <= 0)
                return ret;

            cmd->pdu_recv += ret;
            cmd->rbytes_done += ret;
        }

        nvmet_tcp_unmap_pdu_iovec(cmd);

        /* All data has arrived: execute the command (or check the digest). */
        if (!(cmd->flags & NVMET_TCP_F_INIT_FAILED) &&
            cmd->rbytes_done == cmd->req.transfer_len) {
            if (queue->data_digest) {
                nvmet_tcp_prep_recv_ddgst(cmd);
                return 0;
            }
            nvmet_req_execute(&cmd->req);
        }

        nvmet_prepare_receive_pdu(queue);
        return 0;
    }

nvmet_bdev_execute_rw

    static void nvmet_bdev_execute_rw(struct nvmet_req *req)
    {
        int sg_cnt = req->sg_cnt;
        struct bio *bio;
        struct scatterlist *sg;
        sector_t sector;
        int op, op_flags = 0, i;

        if (!req->sg_cnt) {
            nvmet_req_complete(req, 0);
            return;
        }

        if (req->cmd->rw.opcode == nvme_cmd_write) {
            op = REQ_OP_WRITE;
            op_flags = REQ_SYNC | REQ_IDLE;
            if (req->cmd->rw.control & cpu_to_le16(NVME_RW_FUA))
                op_flags |= REQ_FUA;
        } else {
            op = REQ_OP_READ;
        }

        if (is_pci_p2pdma_page(sg_page(req->sg)))
            op_flags |= REQ_NOMERGE;

        /* Convert the starting LBA into 512-byte sectors. */
        sector = le64_to_cpu(req->cmd->rw.slba);
        sector <<= (req->ns->blksize_shift - 9);

        if (req->data_len <= NVMET_MAX_INLINE_DATA_LEN) {
            bio = &req->b.inline_bio;
            bio_init(bio, req->inline_bvec, ARRAY_SIZE(req->inline_bvec));
        } else {
            bio = bio_alloc(GFP_KERNEL, min(sg_cnt, BIO_MAX_PAGES));
        }
        bio_set_dev(bio, req->ns->bdev);
        bio->bi_iter.bi_sector = sector;
        bio->bi_private = req;
        bio->bi_end_io = nvmet_bio_done;
        bio_set_op_attrs(bio, op, op_flags);

        for_each_sg(req->sg, sg, req->sg_cnt, i) {
            /* When the bio is full, chain a new one and submit the old one. */
            while (bio_add_page(bio, sg_page(sg), sg->length, sg->offset)
                    != sg->length) {
                struct bio *prev = bio;

                bio = bio_alloc(GFP_KERNEL, min(sg_cnt, BIO_MAX_PAGES));
                bio_set_dev(bio, req->ns->bdev);
                bio->bi_iter.bi_sector = sector;
                bio_set_op_attrs(bio, op, op_flags);

                bio_chain(bio, prev);
                submit_bio(prev);
            }

            sector += sg->length >> 9;
            sg_cnt--;
        }

        submit_bio(bio);
    }
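The line `sector <<= (req->ns->blksize_shift - 9)` converts the command's starting LBA, counted in namespace logical blocks, into the 512-byte sectors that the block layer uses. A small sketch of that conversion (`lba_to_sector` is a hypothetical helper; the shift values 9 and 12 correspond to 512-byte and 4096-byte logical blocks):

```c
#include <stdint.h>

/*
 * Hypothetical helper: convert a starting LBA into 512-byte sectors.
 * blksize_shift is log2 of the logical block size and must be at least 9.
 */
static uint64_t lba_to_sector(uint64_t slba, unsigned int blksize_shift)
{
    return slba << (blksize_shift - 9);
}
```

With 512-byte blocks the starting LBA 5120 from the example command maps to sector 5120 unchanged. With 4096-byte blocks the same LBA would map to sector 40960.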

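nvmet_bio_done

Once all bios have been submitted and have completed, bi_end_io fires, which here is nvmet_bio_done. The call chain ends in nvmet_tcp_queue_response, meaning the NVMe command has been processed and its result must be sent back.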

    static void nvmet_bio_done(struct bio *bio)
    {
        struct nvmet_req *req = bio->bi_private;

        nvmet_req_complete(req, blk_to_nvme_status(req, bio->bi_status));
        /* The inline bio is embedded in the request and is not freed. */
        if (bio != &req->b.inline_bio)
            bio_put(bio);
    }

    void nvmet_req_complete(struct nvmet_req *req, u16 status)
    {
        __nvmet_req_complete(req, status);
        percpu_ref_put(&req->sq->ref);
    }
    EXPORT_SYMBOL_GPL(nvmet_req_complete);

    static void __nvmet_req_complete(struct nvmet_req *req, u16 status)
    {
        if (!req->sq->sqhd_disabled)
            nvmet_update_sq_head(req);
        req->cqe->sq_id = cpu_to_le16(req->sq->qid);
        req->cqe->command_id = req->cmd->common.command_id;

        if (unlikely(status))
            nvmet_set_error(req, status);

        trace_nvmet_req_complete(req);

        if (req->ns)
            nvmet_put_namespace(req->ns);
        /* For the TCP transport this is nvmet_tcp_queue_response. */
        req->ops->queue_response(req);
    }

    static void nvmet_tcp_queue_response(struct nvmet_req *req)
    {
        struct nvmet_tcp_cmd *cmd =
            container_of(req, struct nvmet_tcp_cmd, req);
        struct nvmet_tcp_queue *queue = cmd->queue;

        /* Add to the lock-less response list and kick the I/O worker. */
        llist_add(&cmd->lentry, &queue->resp_list);
        queue_work_on(cmd->queue->cpu, nvmet_tcp_wq, &cmd->queue->io_work);
    }

nvmet_tcp_try_send_one

```c
static int nvmet_tcp_try_send_one(struct nvmet_tcp_queue *queue,
        bool last_in_batch)
{
    struct nvmet_tcp_cmd *cmd = queue->snd_cmd;
    int ret = 0;

    if (!cmd || queue->state == NVMET_TCP_Q_DISCONNECTING) {
        cmd = nvmet_tcp_fetch_cmd(queue);
        if (unlikely(!cmd))
            return 0;
    }

    if (cmd->state == NVMET_TCP_SEND_DATA_PDU) {
        ret = nvmet_try_send_data_pdu(cmd);
        if (ret <= 0)
            goto done_send;
    }

    if (cmd->state == NVMET_TCP_SEND_DATA) {
        ret = nvmet_try_send_data(cmd, last_in_batch);
        if (ret <= 0)
            goto done_send;
    }

    if (cmd->state == NVMET_TCP_SEND_DDGST) {
        ret = nvmet_try_send_ddgst(cmd);
        if (ret <= 0)
            goto done_send;
    }

    if (cmd->state == NVMET_TCP_SEND_R2T) {
        ret = nvmet_try_send_r2t(cmd, last_in_batch);
        if (ret <= 0)
            goto done_send;
    }

    if (cmd->state == NVMET_TCP_SEND_RESPONSE)
        ret = nvmet_try_send_response(cmd, last_in_batch);

done_send:
    if (ret < 0) {
        if (ret == -EAGAIN)
            return 0;
        return ret;
    }

    return 1;
}
```

```c
static struct nvmet_tcp_cmd *nvmet_tcp_fetch_cmd(struct nvmet_tcp_queue *queue)
{
    queue->snd_cmd = list_first_entry_or_null(&queue->resp_send_list,
                struct nvmet_tcp_cmd, entry);
    if (!queue->snd_cmd) {
        nvmet_tcp_process_resp_list(queue);
        queue->snd_cmd =
            list_first_entry_or_null(&queue->resp_send_list,
                    struct nvmet_tcp_cmd, entry);
        if (unlikely(!queue->snd_cmd))
            return NULL;
    }

    list_del_init(&queue->snd_cmd->entry);
    queue->send_list_len--;

    if (nvmet_tcp_need_data_out(queue->snd_cmd))
        nvmet_setup_c2h_data_pdu(queue->snd_cmd);
    else if (nvmet_tcp_need_data_in(queue->snd_cmd))
        nvmet_setup_r2t_pdu(queue->snd_cmd);
    else
        nvmet_setup_response_pdu(queue->snd_cmd);

    return queue->snd_cmd;
}
```
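
The three-way choice at the end of nvmet_tcp_fetch_cmd decides which PDU the target sends next. The sketch below is a simplified user-space model of that decision. The `model_*` names are hypothetical, and the two predicates are my paraphrase of what nvmet_tcp_need_data_out and nvmet_tcp_need_data_in appear to check; treat them as assumptions rather than the kernel's exact definitions.

```c
#include <stdbool.h>
#include <stddef.h>

enum model_pdu { MODEL_C2H_DATA, MODEL_R2T, MODEL_RESPONSE };

/*
 * Assumed rules:
 *  - a successful read with data to return sends C2H data first;
 *  - a write whose payload has not fully arrived asks for it with an R2T;
 *  - everything else goes straight to the response capsule.
 */
static enum model_pdu model_pick_pdu(bool is_write, size_t transfer_len,
                                     size_t rbytes_done, unsigned int status)
{
    if (!is_write && transfer_len > 0 && status == 0)
        return MODEL_C2H_DATA;
    if (is_write && rbytes_done < transfer_len)
        return MODEL_R2T;
    return MODEL_RESPONSE;
}
```

Under these assumptions, the 4096-byte write traced in this article, whose data the host sent inline with the command, has already received all of its payload and so falls through to the response branch, which is why nvmet_setup_response_pdu is the next function of interest.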

nvmet_setup_response_pdu

```c
static void nvmet_setup_response_pdu(struct nvmet_tcp_cmd *cmd)
{
    struct nvme_tcp_rsp_pdu *pdu = cmd->rsp_pdu;
    struct nvmet_tcp_queue *queue = cmd->queue;
    u8 hdgst = nvmet_tcp_hdgst_len(cmd->queue);

    cmd->offset = 0;
    cmd->state = NVMET_TCP_SEND_RESPONSE;

    pdu->hdr.type = nvme_tcp_rsp;
    pdu->hdr.flags = 0;
    pdu->hdr.hlen = sizeof(*pdu);
    pdu->hdr.pdo = 0;
    /* Total PDU length: header plus the optional header digest. */
    pdu->hdr.plen = cpu_to_le32(pdu->hdr.hlen + hdgst);
    if (cmd->queue->hdr_digest) {
        pdu->hdr.flags |= NVME_TCP_F_HDGST;
        nvmet_tcp_hdgst(queue->snd_hash, pdu, sizeof(*pdu));
    }
}
```

```c
static int nvmet_try_send_response(struct nvmet_tcp_cmd *cmd,
        bool last_in_batch)
{
    u8 hdgst = nvmet_tcp_hdgst_len(cmd->queue);
    int left = sizeof(*cmd->rsp_pdu) - cmd->offset + hdgst;
    int flags = MSG_DONTWAIT;
    int ret;

    if (!last_in_batch && cmd->queue->send_list_len)
        flags |= MSG_MORE;
    else
        flags |= MSG_EOR;

    ret = kernel_sendpage(cmd->queue->sock, virt_to_page(cmd->rsp_pdu),
        offset_in_page(cmd->rsp_pdu) + cmd->offset, left, flags);
    if (ret <= 0)
        return ret;
    cmd->offset += ret;
    left -= ret;

    if (left)
        return -EAGAIN;

    kfree(cmd->iov);
    sgl_free(cmd->req.sg);
    cmd->queue->snd_cmd = NULL;
    nvmet_tcp_put_cmd(cmd);
    return 1;
}
```
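
nvmet_try_send_response tolerates short sends: it records progress in `cmd->offset` and returns -EAGAIN until the whole PDU has left. The user-space sketch below models only that bookkeeping. The `model_*` names and the `budget` parameter (a fake socket that accepts a limited number of bytes per call) are hypothetical.

```c
#include <errno.h>
#include <stddef.h>

struct model_cmd {
    size_t pdu_len;  /* total bytes of the response PDU */
    size_t offset;   /* bytes already handed to the socket */
};

/* Fake transport: accepts at most `budget` bytes per call. */
static int model_send(size_t len, size_t budget)
{
    return (int)(len < budget ? len : budget);
}

/* Returns 1 when the whole PDU is out, -EAGAIN when bytes remain. */
static int model_try_send_response(struct model_cmd *cmd, size_t budget)
{
    size_t left = cmd->pdu_len - cmd->offset;
    int ret = model_send(left, budget);

    if (ret <= 0)
        return ret;
    cmd->offset += (size_t)ret;
    left -= (size_t)ret;

    if (left)
        return -EAGAIN;
    return 1;
}
```

For example, a 24-byte PDU sent through a transport that takes 16 bytes at a time needs two calls: the first returns -EAGAIN with `offset` at 16, and the second returns 1. Because the caller, nvmet_tcp_try_send_one, maps -EAGAIN to 0, a short send is treated as "try again later" rather than as an error.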

That completes the work on the target side. Back on the host, we follow the execution of try_recv.

Host side

nvme_tcp_try_recv

```c
static int nvme_tcp_try_recv(struct nvme_tcp_queue *queue)
{
    struct socket *sock = queue->sock;
    struct sock *sk = sock->sk;
    read_descriptor_t rd_desc;
    int consumed;

    /* The queue is handed to nvme_tcp_recv_skb through the descriptor. */
    rd_desc.arg.data = queue;
    rd_desc.count = 1;
    lock_sock(sk);
    queue->nr_cqe = 0;
    consumed = sock->ops->read_sock(sk, &rd_desc, nvme_tcp_recv_skb);
    release_sock(sk);
    return consumed;
}
```

```c
static int nvme_tcp_recv_skb(read_descriptor_t *desc, struct sk_buff *skb,
        unsigned int offset, size_t len)
{
    struct nvme_tcp_queue *queue = desc->arg.data;
    size_t consumed = len;
    int result;

    /* Dispatch on the receive state until the skb is fully consumed. */
    while (len) {
        switch (nvme_tcp_recv_state(queue)) {
        case NVME_TCP_RECV_PDU:
            result = nvme_tcp_recv_pdu(queue, skb, &offset, &len);
            break;
        case NVME_TCP_RECV_DATA:
            result = nvme_tcp_recv_data(queue, skb, &offset, &len);
            break;
        case NVME_TCP_RECV_DDGST:
            result = nvme_tcp_recv_ddgst(queue, skb, &offset, &len);
            break;
        default:
            result = -EFAULT;
        }
        if (result) {
            dev_err(queue->ctrl->ctrl.device,
                "receive failed:  %d\n", result);
            queue->rd_enabled = false;
            nvme_tcp_error_recovery(&queue->ctrl->ctrl);
            return result;
        }
    }

    return consumed;
}
```

```c
static int nvme_tcp_recv_pdu(struct nvme_tcp_queue *queue, struct sk_buff *skb,
        unsigned int *offset, size_t *len)
{
    struct nvme_tcp_hdr *hdr;
    char *pdu = queue->pdu;
    size_t rcv_len = min_t(size_t, *len, queue->pdu_remaining);
    int ret;

    ret = skb_copy_bits(skb, *offset,
        &pdu[queue->pdu_offset], rcv_len);
    if (unlikely(ret))
        return ret;

    queue->pdu_remaining -= rcv_len;
    queue->pdu_offset += rcv_len;
    *offset += rcv_len;
    *len -= rcv_len;
    if (queue->pdu_remaining) {
        return 0;
    }

    hdr = queue->pdu;
    if (queue->hdr_digest) {
        ret = nvme_tcp_verify_hdgst(queue, queue->pdu, hdr->hlen);
        if (unlikely(ret))
            return ret;
    }

    if (queue->data_digest) {
        ret = nvme_tcp_check_ddgst(queue, queue->pdu);
        if (unlikely(ret))
            return ret;
    }

    switch (hdr->type) {
    case nvme_tcp_c2h_data:
        return nvme_tcp_handle_c2h_data(queue, (void *)queue->pdu);
    case nvme_tcp_rsp:
        nvme_tcp_init_recv_ctx(queue);
        return nvme_tcp_handle_comp(queue, (void *)queue->pdu);
    case nvme_tcp_r2t:
        nvme_tcp_init_recv_ctx(queue);
        return nvme_tcp_handle_r2t(queue, (void *)queue->pdu);
    default:
        dev_err(queue->ctrl->ctrl.device,
            "unsupported pdu type (%d)\n", hdr->type);
        return -EINVAL;
    }
}
```

```c
static void nvme_tcp_init_recv_ctx(struct nvme_tcp_queue *queue)
{
    queue->pdu_remaining = sizeof(struct nvme_tcp_rsp_pdu) +
                nvme_tcp_hdgst_len(queue);
    queue->pdu_offset = 0;
    queue->data_remaining = -1;
    queue->ddgst_remaining = 0;
}
```
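
Because a PDU header can be split across several socket buffers, nvme_tcp_recv_pdu accumulates bytes using `pdu_offset` and `pdu_remaining` and only parses the header once `pdu_remaining` reaches zero. The user-space sketch below models that reassembly. All `model_*` names are hypothetical, the 24-byte length is an assumed response PDU size with header digest disabled, and unlike the kernel function this model returns 1 when the PDU is complete so a caller can see the transition.

```c
#include <stddef.h>
#include <string.h>

#define MODEL_PDU_LEN 24  /* assumed: response PDU without header digest */

struct model_queue {
    unsigned char pdu[MODEL_PDU_LEN];
    size_t pdu_offset;     /* bytes of the PDU buffered so far */
    size_t pdu_remaining;  /* bytes still missing */
};

/* Role of nvme_tcp_init_recv_ctx: get ready for the next PDU. */
static void model_init_recv_ctx(struct model_queue *q)
{
    q->pdu_offset = 0;
    q->pdu_remaining = MODEL_PDU_LEN;
}

/*
 * Consume up to *len bytes from buf starting at *offset.
 * Returns 1 once a full PDU is buffered, 0 if more bytes are needed.
 */
static int model_recv_pdu(struct model_queue *q, const unsigned char *buf,
                          size_t *offset, size_t *len)
{
    size_t rcv_len = *len < q->pdu_remaining ? *len : q->pdu_remaining;

    memcpy(&q->pdu[q->pdu_offset], buf + *offset, rcv_len);
    q->pdu_remaining -= rcv_len;
    q->pdu_offset += rcv_len;
    *offset += rcv_len;
    *len -= rcv_len;

    return q->pdu_remaining == 0;
}
```

Feeding the model 10 bytes and then 14 bytes yields 0 on the first call (14 bytes still missing) and 1 on the second, at which point `q->pdu` holds the complete header.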

```c
static int nvme_tcp_handle_comp(struct nvme_tcp_queue *queue,
        struct nvme_tcp_rsp_pdu *pdu)
{
    struct nvme_completion *cqe = &pdu->cqe;
    int ret = 0;

    /*
     * On the admin queue (qid 0), command IDs at or above
     * NVME_AQ_BLK_MQ_DEPTH belong to async event requests,
     * which have no struct request behind them.
     */
    if (unlikely(nvme_tcp_queue_id(queue) == 0 &&
        cqe->command_id >= NVME_AQ_BLK_MQ_DEPTH))
        nvme_complete_async_event(&queue->ctrl->ctrl, cqe->status,
                &cqe->result);
    else
        ret = nvme_tcp_process_nvme_cqe(queue, cqe);

    return ret;
}
```

nvme_tcp_process_nvme_cqe

```c
static int nvme_tcp_process_nvme_cqe(struct nvme_tcp_queue *queue,
        struct nvme_completion *cqe)
{
    struct request *rq;

    rq = blk_mq_tag_to_rq(nvme_tcp_tagset(queue), cqe->command_id);
    if (!rq) {
        dev_err(queue->ctrl->ctrl.device,
            "queue %d tag 0x%x not found\n",
            nvme_tcp_queue_id(queue), cqe->command_id);
        nvme_tcp_error_recovery(&queue->ctrl->ctrl);
        return -EINVAL;
    }

    nvme_end_request(rq, cqe->status, cqe->result);
    queue->nr_cqe++;

    return 0;
}
```

```c
static inline void nvme_end_request(struct request *req, __le16 status,
        union nvme_result result)
{
    struct nvme_request *rq = nvme_req(req);

    /* Bit 0 of the CQE status field is the phase tag; drop it. */
    rq->status = le16_to_cpu(status) >> 1;
    rq->result = result;

    nvme_should_fail(req);
    blk_mq_complete_request(req);
}
```
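
The expression `le16_to_cpu(status) >> 1` discards bit 0 of the 16-bit CQE status field, which holds the phase tag, and leaves the status code bits. Here is a small sketch of that shift. The helper name `model_cqe_status` is hypothetical, and it assumes the field has already been converted to host byte order.

```c
#include <stdint.h>

/*
 * Hypothetical helper: strip the phase tag (bit 0) from a CQE status
 * field that is already in host byte order.
 */
static uint16_t model_cqe_status(uint16_t status_field)
{
    return (uint16_t)(status_field >> 1);
}
```

A successful completion therefore yields 0 regardless of the phase bit: both `model_cqe_status(0x0000)` and `model_cqe_status(0x0001)` are 0, while a field of 0x0002 yields status code 1.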

```c
void nvme_complete_rq(struct request *req)
{
    blk_status_t status = nvme_error_status(nvme_req(req)->status);

    trace_nvme_complete_rq(req);

    if (nvme_req(req)->ctrl->kas)
        nvme_req(req)->ctrl->comp_seen = true;

    if (unlikely(status != BLK_STS_OK && nvme_req_needs_retry(req))) {
        if ((req->cmd_flags & REQ_NVME_MPATH) &&
            nvme_failover_req(req))
            return;

        if (!blk_queue_dying(req->q)) {
            nvme_retry_req(req);
            return;
        }
    }

    nvme_trace_bio_complete(req, status);
    blk_mq_end_request(req, status);
}
EXPORT_SYMBOL_GPL(nvme_complete_rq);
```

Control then returns to nvme_user_cmd, where the command was originally issued.

nvme_passthru_end

```c
static void nvme_passthru_end(struct nvme_ctrl *ctrl, u32 effects)
{
    if (effects & NVME_CMD_EFFECTS_LBCC)
        nvme_update_formats(ctrl);
    if (effects & (NVME_CMD_EFFECTS_LBCC | NVME_CMD_EFFECTS_CSE_MASK)) {
        nvme_unfreeze(ctrl);
        nvme_mpath_unfreeze(ctrl->subsys);
        mutex_unlock(&ctrl->subsys->lock);
        nvme_remove_invalid_namespaces(ctrl, NVME_NSID_ALL);
        mutex_unlock(&ctrl->scan_lock);
    }
    if (effects & NVME_CMD_EFFECTS_CCC)
        nvme_init_identify(ctrl);
    if (effects & (NVME_CMD_EFFECTS_NIC | NVME_CMD_EFFECTS_NCC))
        nvme_queue_scan(ctrl);
}
```

This concludes the handling of one ioctl write command.